Introduction

Venture capitalist Marc Andreessen described DeepSeek's R1 model as AI's "Sputnik moment," a reference to the Soviet Union's 1957 satellite launch that spurred the U.S.-USSR space race. He called DeepSeek R1 "one of the most amazing and impressive breakthroughs" he has ever seen. Because it is open source, he also called it "a profound gift to the world."

On October 4, 1957, a small metal sphere sparked a global revolution. Sputnik 1 wasn't particularly sophisticated. It did little more than emit radio beeps as it orbited Earth. But those beeps changed everything. They triggered a technological arms race, massive government investments, and a complete reimagining of what humanity could achieve in space.

Last week, DeepSeek's R1 announcement sent similar shockwaves through the AI community. Like Sputnik, the technical details of R1 are less significant than the broader implications of its launch. DeepSeek’s achievements signal the start of AI's own era of accelerated innovation and strategic recalibration across industries. From national security concerns to transformations in tech and energy sectors, the world is beginning to realign itself in response to this moment. The parallels to the space race highlight how pivotal technological milestones can reshape economies and geopolitics.

Unlike the original Space Race, this isn't limited to superpower rivalry. The implications ripple across every sector of the global economy. AI’s evolution is influencing infrastructure, market strategies, and regulatory frameworks. While Sputnik’s signals lasted only a few weeks, DeepSeek’s impact may shape technology and society for decades to come.

The New Currency: Compute Demands

The market's panicked sell-off of NVIDIA stock reveals a fundamental misunderstanding of AI compute dynamics. A growing narrative holds that DeepSeek achieved breakthrough results with significantly less compute, so the rush to build massive GPU clusters will slow. This couldn't be further from the truth.

DeepSeek demonstrated what's possible with a fraction of the resources that frontier labs like OpenAI have used, thanks to optimizations in distributed training and model architecture. Frontier models have historically demanded far more compute, but DeepSeek's efficiency breakthrough will not curb demand; it will amplify it. When barriers to entry fall, more players flood the market and demand scales rapidly. Hyperscalers have been anticipating this surge and are now scaling infrastructure to accommodate millions of new workloads.

Every major tech company is reassessing its compute requirements. Ambitious startups are strategizing to secure more training capacity, and research labs are updating hardware roadmaps. The market's pullback on NVIDIA's stock is a short-term reaction to an unfounded fear of reduced demand. The truth is that AI’s appetite for compute is only just beginning.

We're entering an era where compute capacity will be as strategically vital as oil reserves were in the 20th century. The real constraints are not demand or supply chain bottlenecks but power infrastructure and cooling capacity. The race is now for access to reliable power grids, efficient cooling, and strategic datacenter locations.

This explains Microsoft's AI datacenter investments in the Middle East, experiments with underwater datacenters such as Microsoft's Project Natick, and AWS's direct negotiations with power providers. OpenAI's Sam Altman and tech giants such as Google and Microsoft are backing nuclear and fusion energy startups. The smart money isn't betting on reduced demand but on which players can scale infrastructure and secure energy resources the fastest.

Current demand is driven by large-scale model training, but the real tsunami of compute needs will come from inference at scale: running these models in production to serve millions of users and handle real-time interactions. DeepSeek proved that competitive models can be built without OpenAI's resources, but this only lowers the barrier to entry for competitors, leading to even greater demand for large compute farms.

Efficiency improvements won't reduce compute consumption. Instead, they'll fuel the pursuit of more advanced capabilities. Economists call this dynamic the Jevons paradox: making a resource cheaper to use tends to increase its total consumption. In AI, unlike traditional software, compute demands are theoretically limitless. Every capacity increase unlocks new applications, deeper reasoning, larger context windows, and continuous performance enhancements.

NVIDIA’s True Headwinds

While AI compute demand is accelerating, NVIDIA faces more complex challenges. Their current dominance is built on two pillars: hardware superiority and software lock-in. Both are under threat.

Competitors like Amazon (Trainium), Google (TPU), and Groq (LPU) are developing custom silicon optimized for AI workloads. These chips are purpose-built for tasks like parallel computation and real-time inference. They are strategic plays aimed at reducing dependency on NVIDIA.

Amazon's collaboration with Anthropic, for example, has produced Project Rainier, a planned supercomputer powered by over 100,000 Trainium2 chips. Google's TPU v5e platform supports configurations of up to 256 interconnected chips and can scale workloads across multiple clusters using its Multislice technology. Groq says its LPU Inference Engine delivers up to 18 times faster throughput than leading cloud-based inference providers. That is an advantage for real-time AI applications built on open models such as DeepSeek's.

However, NVIDIA's real strength isn't just in GPUs; it's in CUDA, their proprietary parallel computing platform. CUDA is deeply integrated with NVIDIA's hardware, enabling developers to optimize performance and scalability, which has entrenched NVIDIA in the AI ecosystem. This software advantage is a deeper moat than any hardware lead. Yet history shows that software monopolies rarely last forever, and pressure is mounting to break this dependence, particularly as AI compute diversifies beyond traditional datacenters.

To maintain their leadership, NVIDIA must evolve from a 'GPU company' to a full-scale AI platform provider. Their investments in foundation models, agentic systems, autonomous vehicles, and humanoid robotics reflect this strategic shift. Platforms like Isaac, for robotics simulation and development, and Jetson, for edge AI hardware, showcase this work. Initiatives such as Project GR00T, a foundation model for humanoid robots, further signal their commitment to advancing AI hardware and software for diverse environments.

NVIDIA is not threatened by DeepSeek's advancements or efficiency gains in model training. In fact, the company stands to benefit significantly from the expanding ambitions of major players. These include OpenAI with its Stargate supercomputing initiative, xAI's infrastructure expansion (including its Colossus supercomputer in Memphis), Meta's aggressive datacenter investments, and the upcoming rollout of the Blackwell chip. The market will eventually recognize and adjust to this reality. Even so, NVIDIA's long-term success will depend on its ability to navigate the growing competition and technological headwinds effectively.

Beyond Hype: Reality Sets In

Let's talk about what efficiency really means in AI development. DeepSeek achieved remarkable results with fewer resources than frontier labs. But "fewer resources" in AI is like saying SpaceX made rockets cheaper: we're still talking about massive scale.

Imagine if every time you wanted to test a code change, you had to wait months instead of minutes. That's the reality of AI development today. DeepSeek reported roughly $6 million (about $5.6 million in GPU rental costs) for the final training run of DeepSeek-V3, the base model R1 builds on. That figure covers compute alone. When you factor in research, development, infrastructure, and talent, the real investment likely approaches what Anthropic's Dario Amodei estimates: between $100 million and $1 billion for advanced model development.
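For scale, the DeepSeek-V3 technical report prices that final run at 2.788 million H800 GPU-hours. It assumes a rental rate of $2 per GPU-hour. Compared with the $100 million to $1 billion range above, the reported figure is only a small slice of the total. The arithmetic below uses only those numbers. The percentages are rounded and are illustrative rather than a cost model.

```latex
\begin{aligned}
2.788 \times 10^{6}\ \text{GPU-hours} \times \$2/\text{GPU-hour} &\approx \$5.58\ \text{million} \\
\frac{\$5.58\ \text{million}}{\$100\ \text{million}} &\approx 5.6\% \\
\frac{\$5.58\ \text{million}}{\$1\ \text{billion}} &\approx 0.56\%
\end{aligned}
```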

DeepSeek's evolutionary breakthroughs came from a mix of necessity and innovation. Some optimizations were born from export restrictions, others from pure technical insight. But here's what most miss: the closed-source frontier labs guard their techniques zealously. What looks revolutionary in public might be standard practice behind closed doors.

What we do know is this: the experiments will continue. Models will evolve. Techniques will improve. Each breakthrough, whether public or private, doesn't reduce compute demand; it opens new frontiers. DeepSeek showed what's possible with creative constraints. Now watch as the entire field absorbs these lessons and pushes even further beyond.

Capabilities

DeepSeek-R1 has demonstrated strong performance in tasks such as mathematics, coding, and reasoning. Its smaller distilled versions may offer practical advantages for local deployments, where closed, API-only models like OpenAI's o1 cannot run at all. One such application is serving as an intelligent router or orchestrator in an agentic system, where advanced local reasoning is critical. The model writes out its chain-of-thought (CoT) reasoning before answering, which exposes its problem-solving process. That visible reasoning can help with training smaller models and debugging complex tasks.
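DeepSeek-R1 places its chain of thought between `<think>` and `</think>` tags, followed by its final answer. An orchestrator built on a distilled R1 model will usually want to log the reasoning for debugging and pass only the answer downstream. The minimal sketch below splits a raw completion into those two parts. The function name `split_reasoning` is hypothetical. The sketch also assumes that some serving templates insert the opening `<think>` tag themselves, so the completion may contain only the closing tag.

```python
def split_reasoning(completion: str) -> tuple[str, str]:
    """Split an R1-style completion into (reasoning, answer).

    Assumption: the reasoning ends at the first "</think>" tag; the opening
    "<think>" may be absent if the chat template already emitted it.
    """
    head, sep, tail = completion.partition("</think>")
    if not sep:
        # No reasoning block found: treat the whole completion as the answer
        return "", completion.strip()
    # Drop the optional opening tag and surrounding whitespace
    reasoning = head.strip().removeprefix("<think>").strip()
    return reasoning, tail.strip()


if __name__ == "__main__":
    raw = "<think>\nThe user wants a sum: 17 + 25 = 42.\n</think>\n\nThe answer is 42."
    reasoning, answer = split_reasoning(raw)
    print("reasoning:", reasoning)  # keep for logs and debugging
    print("answer:", answer)        # forward to the next agent or tool
```

In a router, only `answer` would typically be used to pick the next tool or agent. The `reasoning` string is worth keeping in traces, because it shows why a route was chosen.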

Additionally, DeepSeek’s use of creative constraints, including optimizations influenced by export restrictions, showcases its capacity to drive innovation with limited resources. This has positioned it as a competitive player in the AI space, especially for organizations seeking alternatives to Western-developed models.

Limitations

However, DeepSeek-R1 is not without significant limitations. First, it lacks multimodal capabilities, unlike competitors such as OpenAI’s o1, which can process both text and images. This restricts DeepSeek’s versatility across domains that require integration of diverse data types.

The model also faces criticism for censorship and content restrictions. DeepSeek-R1 tends to avoid sensitive political topics and aligns its responses with Chinese government narratives. This bias raises concerns about content neutrality and limits its applicability in scenarios where unbiased outputs are crucial.

Another major drawback is the model's vulnerability to security threats. Research has shown that DeepSeek-R1 can be manipulated into generating harmful content, including hate speech and insecure code. It is susceptible to attacks such as prompt injections, jailbreaks, and glitch tokens, posing risks for enterprise deployments. Glitch tokens are anomalous tokens in a model's vocabulary, rarely seen during training, that can trigger unexpected or harmful outputs.

Risks

Security and data privacy are major concerns. Organizations using DeepSeek-R1 through DeepSeek's own hosted infrastructure risk exposing sensitive data due to platform vulnerabilities. For those opting for local deployment, enabling the Hugging Face trust_remote_code option introduces further risk. It lets Python code shipped in the model repository execute on the host machine, potentially opening the door to malicious exploits.
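Before enabling trust_remote_code, it helps to see exactly which code would run. In a Hugging Face model repository, custom architectures are wired in through the `auto_map` entry of `config.json`. That entry points the Auto classes at Python modules shipped in the repo. The hypothetical helper below takes a repository file list and the parsed config, and reports the modules that remote code would import so they can be reviewed first. The file list could be fetched with `huggingface_hub.list_repo_files`. The helper name and the example file and class names are illustrative assumptions.

```python
def audit_remote_code(filenames: list[str], config: dict) -> dict:
    """Summarize what enabling trust_remote_code would execute (hypothetical helper).

    filenames: files in the model repository.
    config: the repository's config.json, parsed into a dict.
    """
    auto_map = config.get("auto_map", {})
    targets = []
    for value in auto_map.values():
        # Tokenizer entries can be a [slow_class, fast_class] list, possibly with None
        items = value if isinstance(value, list) else [value]
        targets.extend(v for v in items if v)
    # "modeling_x.SomeClass" -> module "modeling_x", which maps to modeling_x.py
    modules = sorted({t.rsplit(".", 1)[0] for t in targets})
    python_files = sorted(f for f in filenames if f.endswith(".py"))
    return {
        "requires_remote_code": bool(targets),
        "modules": modules,
        "python_files": python_files,  # review these before trusting them
    }


if __name__ == "__main__":
    files = ["config.json", "modeling_deepseek.py", "configuration_deepseek.py",
             "model-00001-of-00002.safetensors"]
    cfg = {"auto_map": {
        "AutoConfig": "configuration_deepseek.DeepseekV3Config",
        "AutoModelForCausalLM": "modeling_deepseek.DeepseekV3ForCausalLM",
    }}
    print(audit_remote_code(files, cfg))
```

A config without `auto_map` uses an architecture built into the library. In that case, this check reports no remote code to review.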

There are also legal risks tied to the origins of the model’s training data. Questions around data sourcing and compliance with international regulations could lead to complications for companies using the model, particularly those operating in multiple jurisdictions.

Furthermore, DeepSeek’s privacy policies indicate that user data may be stored on servers located in China. This has heightened concerns over surveillance and data security, especially for users outside the country.

A Notable Advancement with Sharp Edges

DeepSeek-R1 represents a significant advancement in AI, particularly in cost-efficiency and creative optimization under constraints. It is also one of the most advanced reasoning-focused open models currently available, which adds to its appeal for research and local applications. However, its limitations and risks cannot be ignored. Organizations should proceed with caution, thoroughly evaluating security vulnerabilities, legal implications, and content bias before adopting the model. Strong safeguards and continuous assessments are essential to ensure that the potential benefits of DeepSeek-R1 are not overshadowed by its risks.

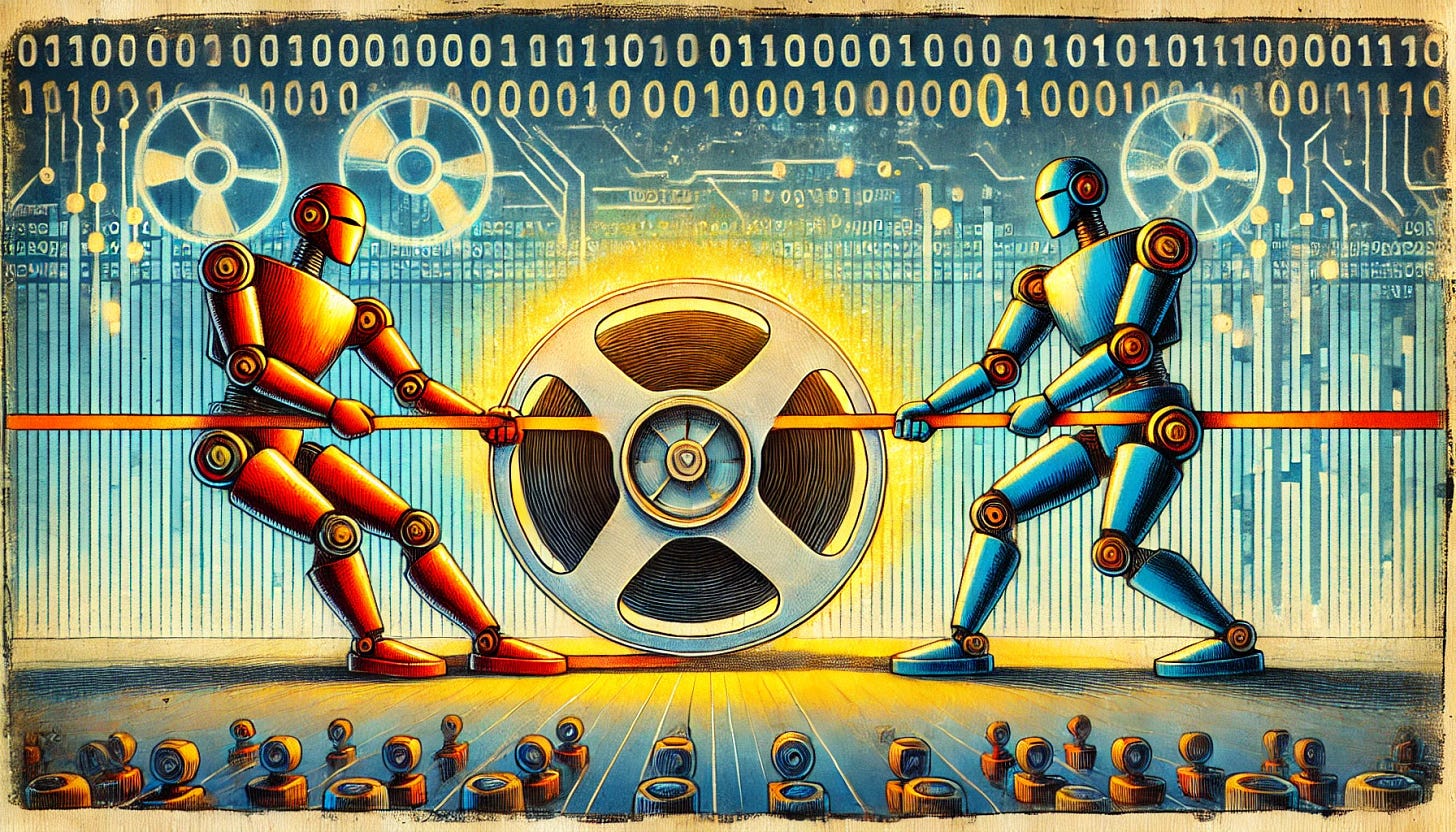

Training Data Wars

The story becomes even more interesting when we turn our attention to training data. Evidence suggests that DeepSeek may have used OpenAI’s outputs to train its own model, igniting a growing battle over the future of AI training data. OpenAI’s recent stance on data usage not only appears hypocritical but also underscores a paradox at the heart of AI development.

OpenAI stakeholders and prominent industry voices, including Vinod Khosla and David Sacks, have accused DeepSeek of "theft," citing "substantial evidence" of unauthorized data use. However, proving this claim is difficult, given how thoroughly OpenAI's outputs have diffused across the open web. But the real issue isn't just about attribution; there's a deeper paradox surrounding AI training practices.

While DeepSeek's alleged use of OpenAI outputs may violate OpenAI's terms of service, OpenAI itself has trained models on vast amounts of copyrighted material. OpenAI has even told UK lawmakers that it would be impossible to train today's leading AI models without using copyrighted content. The irony of asserting intellectual property rights over outputs built on others' IP has not gone unnoticed in the AI community.

The challenge facing foundational model developers goes even deeper. We are approaching "peak data," a term popularized by Ilya Sutskever, former OpenAI chief scientist and co-founder of Safe Superintelligence (SSI). It refers to the point at which we exhaust the supply of high-quality human-generated content suitable for training. The current pace of AI development far exceeds our ability to create new, authentic training data, and this looming scarcity is pushing the industry toward synthetic data generation.

Yet this creates a recursive dilemma. If foundational models were trained on copyrighted content without permission, can their developers credibly object when others use those models' outputs to generate synthetic training data? We've entered a legal and ethical maze with no clear exit.

This crisis isn't just a theoretical debate. Data scarcity raises questions about the upper limits of AI development. As traditional data sources dwindle, the industry faces a critical challenge: how can we sustain the insatiable demand for training data when every potential solution introduces new legal and ethical complications?

While DeepSeek achieved remarkable compute efficiencies, they faced the same fundamental data issues as the rest of the industry. Like others, they apparently relied heavily on synthetic data, underscoring the impending crisis Sutskever warns about. Compute efficiency alone cannot solve this problem. The industry is approaching a data wall that optimization can’t overcome. To continue advancing AI, we need fundamentally new approaches to data. These have yet to emerge.

Competitive Landscape in Overdrive

We're witnessing the start of a new phase in AI development – one where efficiency innovations are reshaping competitive dynamics in unexpected ways.

Accelerated Release Cycles

The traditional 6-12 month model iteration cycle is dead. DeepSeek's breakthrough has triggered a cascade of accelerated releases, with major players rushing to demonstrate their own efficiency gains. This is more than keeping up with competitors. Providers must now prove their ability to innovate rapidly under constraints. The real race isn't about raw performance anymore; it's about finding novel approaches to squeeze more capability from limited resources.

For companies like OpenAI, Anthropic and xAI, the stakes are even higher. With billions invested in massive GPU clusters, they face mounting pressure from investors to prove that their infrastructure spending remains a competitive advantage, not a liability. If they can successfully integrate DeepSeek's efficiency innovations while leveraging their superior compute capacity, they could accelerate the development of even more advanced multimodal reasoning models. This would not only validate their infrastructure investment strategy but potentially widen the capability gap between frontier labs and their competitors. The pressure is intense. Now they must demonstrate that massive compute resources, when combined with these new efficiency techniques, can drive innovation at an unprecedented pace.

The Rise of Chinese AI Powerhouses

DeepSeek's emergence signals a broader shift in the AI landscape, but it's just one piece of a larger story unfolding in Chinese AI development. Alibaba says its recent Qwen2.5-Max release performs comparably to GPT-4o on key benchmarks, even though Alibaba operates under strict hardware constraints. Meanwhile, Huawei's move to support DeepSeek inference on its Ascend AI processors shows how Chinese companies are building complete, vertically integrated AI stacks, from model architecture to custom silicon.

While the headlines are focused on individual achievements, we are witnessing the emergence of a parallel AI ecosystem. Huawei’s Atlas AI computing solution, ByteDance's machine learning infrastructure innovations and Baidu's optimization breakthroughs complement these developments. Chinese AI companies aren't just replicating Western approaches; they're pioneering new paths born from necessity and regulatory constraints.

The implications extend beyond technical achievements. These companies are showing they can compete at the frontier while operating under strict export controls and resource constraints. Huawei's Ascend AI chips now serve inference for models like DeepSeek's. This suggests that custom silicon developed under constraints can deliver competitive performance. It also supports a key thesis: you don't need unrestricted access to cutting-edge Western chips to push the boundaries of what's possible.

DeepSeek's Trajectory and National Implications

DeepSeek's success has far-reaching implications for national AI strategies. By building competitive models with significantly less compute, DeepSeek may provide a blueprint for countries facing similar constraints. These include nations under Western sanctions and export controls, such as Iran and Russia. This "efficiency-first" approach is likely to gain traction, with more companies and nations adopting similar strategies or turning to China to bolster their AI initiatives. This shift could reshape the global AI development landscape in profound ways.

ByteDance's Accelerating AI Ambitions

ByteDance's recent moves demand close attention. While they've long been known for building one of the world's most sophisticated recommendation engines, their AI ambitions now extend far beyond content optimization. Recent reports reveal ByteDance is making significant investments in reasoning-focused AI development, directly challenging the capabilities traditionally dominated by Western labs.

The announcement of Doubao-1.5-pro this week demonstrates just how serious these ambitions are. ByteDance reports that the model's "Deep Thinking" mode outperforms OpenAI's latest models on the AIME benchmark. It also reports that the model's MoE (Mixture of Experts) architecture delivers dense-model performance with just one-seventh of the activated parameters.

MoE is a neural network design in which a learned router activates only a few expert subnetworks for each input token. As a result, each inference step uses only a fraction of the model's total parameters. In Doubao's case, ByteDance says the model matches the performance of a 140B-parameter dense model while activating only 20B parameters at a time. Its heterogeneous system design for prefill/decode and attention/feed-forward operations shows that ByteDance is innovating beyond model architecture. It is rethinking the entire inference stack.
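The routing idea behind MoE can be sketched in a few lines. The toy example below uses top-k gating, a common MoE design. A router scores every expert for a token, and only the k best-scoring experts run. Their outputs are then mixed with softmax weights renormalized over the selected experts. This is a generic illustration, not Doubao's implementation. The function name `moe_forward`, the expert functions, and the router weights are all hypothetical.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    # Numerically stable softmax: subtract the max before exponentiating
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, k=2):
    """Top-k MoE layer for a single token vector x.

    router_weights: one weight vector per expert (router score = dot product).
    experts: callables mapping a vector to a vector of the same length.
    Returns (output vector, indices of the experts that actually ran).
    """
    # Score every expert for this token
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep only the k highest-scoring experts
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize gate weights over the selected experts only
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)  # unselected experts are never evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top
```

With k of E equally sized experts selected, each token pays for roughly k/E of the expert parameters. This is the mechanism behind claims like "20B active out of a much larger total." However, every expert still has to be held in memory.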

Their approach is characteristically pragmatic yet ambitious. Rather than simply chasing raw performance metrics, ByteDance is targeting specific reasoning capabilities that could differentiate their models in practical applications. Their internal AI infrastructure, which already rivals major cloud providers, gives them a strong foundation for this push.

What makes this particularly interesting is ByteDance's history of turning technical innovations into user-facing products rapidly and at scale. If they can successfully combine their expertise in deployment and optimization with these advances in AI reasoning and efficiency, they could raise expectations about what's possible in consumer AI applications.

Frontier Companies: The Response

The established AI leaders aren't standing still. Their response to DeepSeek's breakthrough reveals evolving priorities and new competitive dynamics.

Pricing Pressures and Market Dynamics

OpenAI's pricing strategy has moved toward higher rates rather than cost-cutting. OpenAI introduced a $200/month Pro plan that offers premium access to models and features like Operator. It has also reportedly projected raising the Plus plan from $20/month to $44/month over the next five years. These pricing strategies are now likely under heavy scrutiny as efficiency gains from competing models create downward pricing pressure across the industry. To remain competitive, OpenAI may need to reevaluate these plans and find new ways to differentiate beyond raw model performance.

The Push for Efficiency at Scale

Frontier labs are now racing to apply DeepSeek's insights at massive scale. If these efficiency gains can be replicated with larger models, we could see another leap in capabilities. The key question isn't whether these techniques work – it's whether they can scale to trillion-parameter models without losing their benefits.

New Focus on Reasoning Capabilities

Reasoning has been at the forefront of AI development since the Q* rumors, the Strawberry teasers, and the release of OpenAI's o1. DeepSeek's performance doesn't shift the focus to reasoning. Instead, it resets expectations around what is required to train these capabilities. It raises the bar on what's possible while lowering the compute barriers needed to achieve it, paving the way for more players to enter the field with competitive models.

The Distillation Frontier

Another critical competitive front is emerging around model distillation, particularly within the open-source and self-hosted model ecosystem. The race to develop smaller, more efficient models that retain much of the performance of their larger counterparts is accelerating. These compact models not only reduce costs and improve speed but also unlock new deployment scenarios and applications. While frontier labs prioritize advancing raw capabilities, companies focused on practical deployments are betting that achieving scalable distillation could open entirely new markets and use cases.

The ability to run powerful models locally, whether for privacy, latency, or cost reasons, represents a distinct value proposition from cloud-based API services. This creates interesting dynamics where companies might leverage frontier models for training but compete on their ability to deliver compressed, specialized versions for specific applications.
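Distillation can take several forms. DeepSeek's R1 paper describes fine-tuning smaller Qwen and Llama models on samples generated by R1. The sketch below instead shows a different, classic variant: logit-matching knowledge distillation. In this approach, a student model is trained to match the teacher's temperature-softened output distribution. It is plain Python on toy logits and illustrates the loss only, not a training pipeline. The function names are hypothetical.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    # Higher temperature flattens the distribution, exposing the teacher's
    # relative preferences among non-top tokens
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(teacher_logits: list[float], student_logits: list[float],
            temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # Scaling by T^2 keeps gradient magnitudes comparable across temperatures
    return kl * temperature ** 2
```

In practice, this term is usually mixed with an ordinary cross-entropy loss on ground-truth labels. A student whose logits track the teacher's ranking earns a lower loss than one that reverses it.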

Policy and Regulatory Landscape

Global AI policy frameworks are undergoing a significant recalibration. What began with targeted export controls on advanced chips has expanded into a complex network of regulatory measures that could redefine the industry’s future.

Export controls may not be having their intended effect. Restrictions on advanced semiconductors have slowed some development paths but accelerated others. DeepSeek's efficiency innovations demonstrate how hardware constraints can fuel software breakthroughs. This presents a key dilemma for policymakers: how do you regulate technological progress when limitations themselves become a catalyst for innovation?

The debate around open source AI has reached a critical juncture. DeepSeek’s recent release underscores growing tensions between innovation and control. Advocates of unrestricted open source development argue for faster progress, broader access, and stronger security through transparency. However, national security concerns are increasingly challenging this perspective. Once these techniques are publicly released, efforts to contain them become nearly impossible.

We are likely to see TikTok-style regulatory measures applied to AI models and infrastructure. Similar to how TikTok faces scrutiny over data flows and algorithmic influence, AI models developed or operated by companies linked to strategic competitors are likely to come under increased regulatory attention. Yet regulating AI presents unique challenges. Models trained in one jurisdiction can be deployed globally, and efficiency advancements—such as DeepSeek’s innovations—make localized deployment more viable than ever.

Regulatory bodies face a tough balancing act: staying ahead in the AI race without stifling innovation. Europe's experience offers a cautionary tale. Despite ambitious plans and a robust talent pool, the EU's regulatory-first approach has created barriers that hinder AI development, potentially widening the gap with the US and China.

For instance, France's Mistral AI released Mixtral 8x7B in December 2023. It was an influential open model that applied the MoE technique to build scalable, efficient AI systems. Yet despite this progress, the EU's regulatory complexity risks delaying future innovations. The AI Act, intended to promote safe and ethical AI, imposes high compliance costs, legal ambiguity, and administrative burdens. These can deter investment and slow experimentation, particularly for startups and small-to-medium enterprises (SMEs). As a result, many European companies are forced to choose between regulatory compliance and competitive relevance, while counterparts in the US and China push forward with fewer constraints.

This evolving regulatory asymmetry is becoming a crucial factor. While Western nations debate tighter AI restrictions, other regions are advancing under different frameworks. This divergence is not just creating parallel development paths; it is challenging traditional notions of how technology control regimes operate in an era of distributed innovation.

The key question is no longer whether more regulation is coming. It is whether traditional approaches can meaningfully influence AI development in a world where breakthroughs can emerge from unexpected places with limited resources. With the potential arrival of artificial superintelligence on the horizon, the stakes have arguably never been higher. Policymakers will need to innovate just as creatively as the technologies they aim to govern, or choose to simply stay out of the way.

Strategic Moves and Market Evolution

DeepSeek's initial success points to several clear next steps. Their efficient reasoning architecture opens new possibilities for model deployment and development strategies.

Their distillation achievements are particularly notable. By compressing advanced reasoning capabilities into smaller models, DeepSeek provides a path for local deployment of cognitive capabilities. The timing is significant: just as OpenAI launches Operator for cloud-based agent architectures, DeepSeek shows that powerful reasoning engines could run locally. This directly challenges the centralized agent paradigm OpenAI is betting on.

The vision of powerful local agents isn't new, but DeepSeek's breakthrough makes it viable. What was a theoretical future, local agents with reasoning capabilities approaching their cloud-based siblings, is now an engineering challenge. Their architecture shows that local deployment requires rethinking how we build intelligent systems. And yet, ironically, this will only accelerate GPU demand. As companies race to train these efficient models and their variants, they'll be snatching up Blackwell chips faster than NVIDIA can manufacture them.

Europe's absence from these developments is notable. DeepSeek's efficiency breakthroughs show that massive compute isn't the only path to competitive AI capabilities. Despite regulatory headwinds, European companies have a track record of building competitive systems under constraints. They must act fast to stay relevant and re-enter the conversation.

2025 market indicators point to:

Faster model deployment cycles driven by efficiency gains, and increased global competition

New approaches to training data beyond traditional sources

Efficiency improvements across training and deployment

Downward pressure on pricing as performance-per-dollar becomes critical

A shift from benchmark performance to real-world verification and generalizability

Traditional benchmarks are becoming less relevant. They reflect training optimization more than actual capability and ability to generalize. The industry needs new frameworks for measuring AI performance, especially for reasoning and agent systems.

DeepSeek will likely focus on:

Domain-specific reasoning engines

Improvements in architecture that further reduce compute needs and improve model distillation

Potential expansion into multimodality

Advances in agent capabilities built on their reasoning models

Partnerships that expand their training data and compute capacity

Now the question is how quickly these changes will unfold. We're entering an era where efficient innovation, not just massive scale, drives AI progress.

Beyond the Beep

DeepSeek’s efficiency breakthrough represents a pivotal moment for the AI industry, but it is only the beginning of a broader transformation. The past decade focused on scaling models to unprecedented sizes, but the future will reward those who can not only scale up, but also prioritize smarter, more efficient systems. These smarter systems will include models that can dynamically adapt to real-time feedback, optimize for specific tasks rather than general performance, and utilize techniques like MoE and model distillation to reduce resource demands. They will emphasize enhanced reasoning capabilities, improvements to chain-of-thought problem solving, and energy-efficient operations that integrate seamlessly with edge computing and distributed inference strategies.

We are now entering an era defined by four critical forces: efficiency innovations, evolving regulatory frameworks, data scarcity, and dynamic market competition. The companies and nations that can balance these pressures will shape the next phase of technological advancement.

Key Takeaways and Predictions

Efficiency-Driven Progress: Innovations in reasoning-focused architectures, model distillation, and resource optimization will enable applications previously deemed infeasible. Organizations should prioritize metrics that measure capability-per-compute rather than raw performance alone.

Infrastructure Adaptation: Companies must develop flexible infrastructure strategies that can rapidly incorporate new optimization techniques, such as distributed workload scheduling, energy-efficient cooling innovations, and model distillation approaches aimed at reducing compute overhead without sacrificing performance. Distributed deployment models and hybrid cloud approaches will become key to gaining a strategic edge.

Data and Regulatory Challenges: Synthetic data generation and enhanced data curation practices will become crucial. Companies will need robust data governance strategies to navigate an increasingly complex legal landscape. Proprietary data marketplaces will also become more lucrative and open up new monetization opportunities for businesses.
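The capability-per-compute metric from the first takeaway can be made concrete with a common rule of thumb. Generating one token costs roughly 2 floating-point operations per active parameter. The sketch below divides a benchmark score by that per-token cost. This naturally credits MoE models for the parameters they leave idle. The model names, scores, and parameter counts are hypothetical, as are the helper names.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    name: str               # hypothetical model identifier
    score: float            # hypothetical benchmark score (0-100)
    active_params_b: float  # parameters activated per token, in billions


def gflops_per_token(active_params_b: float) -> float:
    # Rule of thumb: ~2 FLOPs per active parameter per generated token,
    # so N billion active parameters cost about 2N GFLOPs per token
    return 2.0 * active_params_b


def capability_per_compute(model: ModelProfile) -> float:
    # Benchmark points earned per GFLOP of per-token inference compute
    return model.score / gflops_per_token(model.active_params_b)


def rank_by_efficiency(models: list[ModelProfile]) -> list[tuple[str, float]]:
    # Highest capability-per-compute first
    scored = [(m.name, capability_per_compute(m)) for m in models]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = [
        ModelProfile("dense-70b", score=70.0, active_params_b=70.0),
        ModelProfile("moe-20b-active", score=75.0, active_params_b=20.0),
    ]
    for name, value in rank_by_efficiency(candidates):
        print(f"{name}: {value:.3f} points per GFLOP/token")
```

This metric deliberately ignores memory. An MoE model must still keep all of its experts resident, so a fuller comparison would also weigh total parameters, latency, and cost per token.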

Strategic Implications for Stakeholders

Enterprises: Focus on building adaptable AI ecosystems that can quickly adopt emerging efficiency breakthroughs. Identify specific AI capabilities that directly drive business outcomes rather than chasing larger, more complex models.

Policymakers: Develop forward-thinking regulations that balance innovation with security and ethical considerations. Collaboration across borders will be essential to create standards that enable trust and transparency.

Researchers and Innovators: Accelerate research into new model architectures that emphasize reasoning efficiency, multimodal capabilities, and enhanced security. Open collaboration and knowledge sharing will be crucial to overcoming technical and ethical challenges.

Revisiting the Analogy

Just as Sputnik’s launch reshaped global ambitions in space exploration, DeepSeek’s advancements have set off a similar chain reaction in AI development. This moment is about more than technology; it’s about leadership, strategy, and the responsibility to harness these breakthroughs for the benefit of society. The race to build bigger models has given way to a race for intelligence, sustainability, and ethical progress.

The true test is not who can build the largest model, but who can build the smartest, most adaptable system. The next wave of AI development will be defined by those who can balance technical innovation with practical constraints, regulatory compliance with competitive advantage, and efficiency with capability.

The beeps from Sputnik lasted only three weeks, but their impact reshaped human history. DeepSeek’s breakthrough might just do the same for AI. The question now is: are we ready for it?